AI is reshaping our world. But many companies struggle with high costs, slow performance, and complexity in AI development.

Combining Golang’s speed and simplicity with large language models’ human-like language understanding and smart automation offers a promising way to cut development time and lower expenses.

Read on to discover how this powerful mix can drive scalable, efficient AI innovation for your business.

Why Golang with LLMs is the Preferred Choice for Businesses

Golang is an efficient programming language that meets the requirements of large language model (LLM) applications well. You can hire dedicated Golang developers in India to build high-performance AI applications using Go with LLMs.

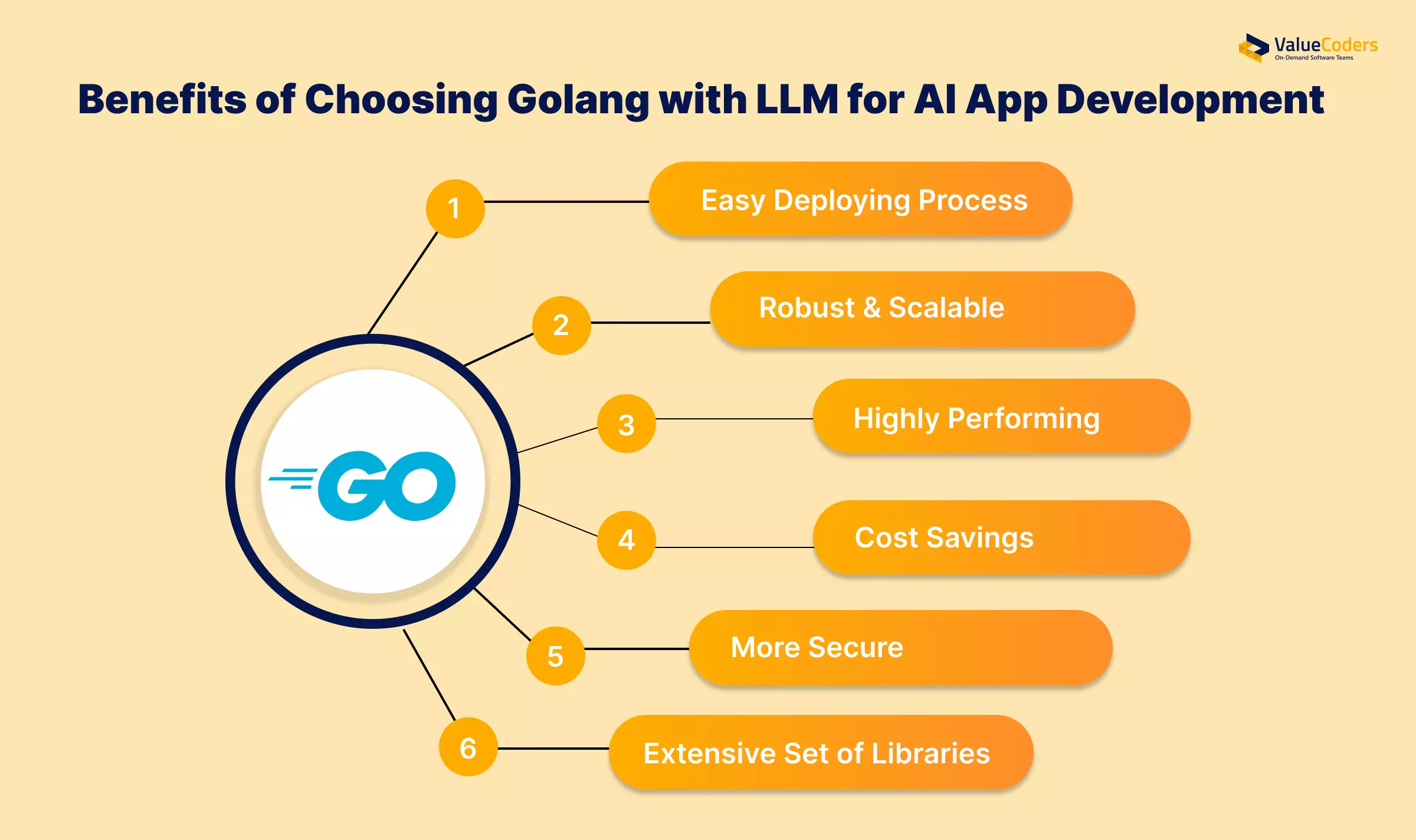

Here is why Golang is the preferred choice for AI applications:

Easy Deployment Process

Go simplifies deployment by producing statically linked binaries that can be built for multiple platforms and run without additional dependencies. This makes it easier to deploy LLM-based applications on targets such as:

- Servers

- Edge systems

- Cloud environments

Minimal runtime requirements also reduce setup complexity and improve deployment speed. These features allow businesses to deploy robust applications while keeping resource usage low.

Robust & Scalable

Golang is a strong choice for building applications that must grow with demand. Its support for microservices allows developers to break functionality down into smaller, manageable components, each of which can operate and scale independently, such as:

- Sentiment analysis

- Recommendation engines

Go also integrates seamlessly with tools like Kubernetes and Docker, simplifying deployment in containerized environments. For example, a content recommendation system can use separate microservices to handle user preferences and scale them as needed.

Highly Performant

Golang is suitable for developing server-side apps and offers high performance, including for computational workloads. Because it compiles to native code, it executes quickly, making it an excellent choice for applications that use LLM development services.

With features like garbage collection and a low memory footprint, Go supports LLM-based applications that require quick processing and minimal overhead. These attributes make it ideal for distributed systems and serverless deployments.

Cost Savings

Thanks to its clean syntax and standard library, Golang supports faster coding and reduced development time. These features help developers build applications quickly, reducing the time needed to bring products to market.

Its lightweight nature also helps conserve computational resources, reducing infrastructure costs. Go’s concurrency model allows applications to manage multiple tasks efficiently, avoiding overspending on additional hardware or services.

More Secure

Go prioritizes secure application development with features like a strong type system and memory safety. These protections help prevent issues such as buffer overflows and make memory leaks less likely, reducing vulnerabilities that attackers might exploit.

The language encourages secure coding practices, such as input validation and explicit error handling, to minimize risks like injection attacks. Additionally, Go’s built-in dependency management tools help ensure that third-party libraries don’t introduce unnecessary risks.
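As a minimal sketch of the input validation and error handling mentioned above, the following checks a user prompt before it reaches a model. The function name `validatePrompt`, the error values, and the 2000-rune limit are assumptions chosen for illustration, not part of any library.

```go
package main

import (
	"errors"
	"fmt"
	"strings"
	"unicode/utf8"
)

// maxPromptRunes is an assumed limit chosen for illustration only.
const maxPromptRunes = 2000

var (
	errEmptyPrompt   = errors.New("prompt is empty")
	errPromptTooLong = errors.New("prompt exceeds length limit")
	errInvalidUTF8   = errors.New("prompt is not valid UTF-8")
)

// validatePrompt trims whitespace and rejects empty, oversized, or
// malformed input before it is passed to a model or downstream system.
func validatePrompt(raw string) (string, error) {
	if !utf8.ValidString(raw) {
		return "", errInvalidUTF8
	}
	p := strings.TrimSpace(raw)
	if p == "" {
		return "", errEmptyPrompt
	}
	if utf8.RuneCountInString(p) > maxPromptRunes {
		return "", errPromptTooLong
	}
	return p, nil
}

func main() {
	for _, in := range []string{"  Summarize this ticket.  ", "   "} {
		p, err := validatePrompt(in)
		if err != nil {
			fmt.Println("rejected:", err)
			continue
		}
		fmt.Printf("accepted: %q\n", p)
	}
}
```

Returning sentinel errors lets callers decide how to respond to each failure, which fits Go’s habit of handling errors explicitly at the call site.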

Extensive Set of Libraries

Golang offers a rich library and framework ecosystem that enhances its ability to build AI applications. Web frameworks such as Echo and Gin make it easy to set up RESTful APIs, middleware, and other essential tools.

- Gonum and Go-Torch enable advanced computations

- GoNLP and spaCy (a Python library, typically reached from Go through a separate service) help with sentiment analysis and text classification

Go supports integration with popular frameworks like PyTorch and TensorFlow for machine learning and natural language processing tasks.

Top Challenges in Golang-LLM Integration

While Go and LLMs together are a powerful combination for AI development, some specific issues arise when integrating them:

- Dependency on External Models: LLMs often rely on pre-trained models that may require third-party tools or APIs. This increases the number of dependencies.

- GPU Integration: Using GPUs for LLM training and inference with Go can be complex due to limited native support.

- Debugging Complex Systems: Troubleshooting applications that combine Go’s concurrency model with LLM operations can be challenging.

- Data Management: For operations to flow smoothly, you must ensure that input data is formatted correctly and outputs are processed efficiently.
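The data management point can be sketched as strict decoding of model output into a typed structure. This assumes the model was asked to reply in JSON; the `summary` schema and the `parseSummary` helper are hypothetical names used only for this example.

```go
package main

import (
	"encoding/json"
	"errors"
	"fmt"
	"strings"
)

// summary is a hypothetical schema the model is asked to follow.
type summary struct {
	Title  string   `json:"title"`
	Points []string `json:"points"`
}

// parseSummary decodes model output strictly: unknown fields, malformed
// JSON, and a missing title are all reported as errors.
func parseSummary(raw string) (summary, error) {
	var s summary
	dec := json.NewDecoder(strings.NewReader(raw))
	dec.DisallowUnknownFields()
	if err := dec.Decode(&s); err != nil {
		return summary{}, fmt.Errorf("decode model output: %w", err)
	}
	if strings.TrimSpace(s.Title) == "" {
		return summary{}, errors.New("model output has no title")
	}
	return s, nil
}

func main() {
	out := `{"title":"Q3 report","points":["Revenue grew","Costs were flat"]}`
	s, err := parseSummary(out)
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Println(s.Title, len(s.Points))
}
```

Rejecting unexpected output early keeps malformed model replies from spreading into later processing steps.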

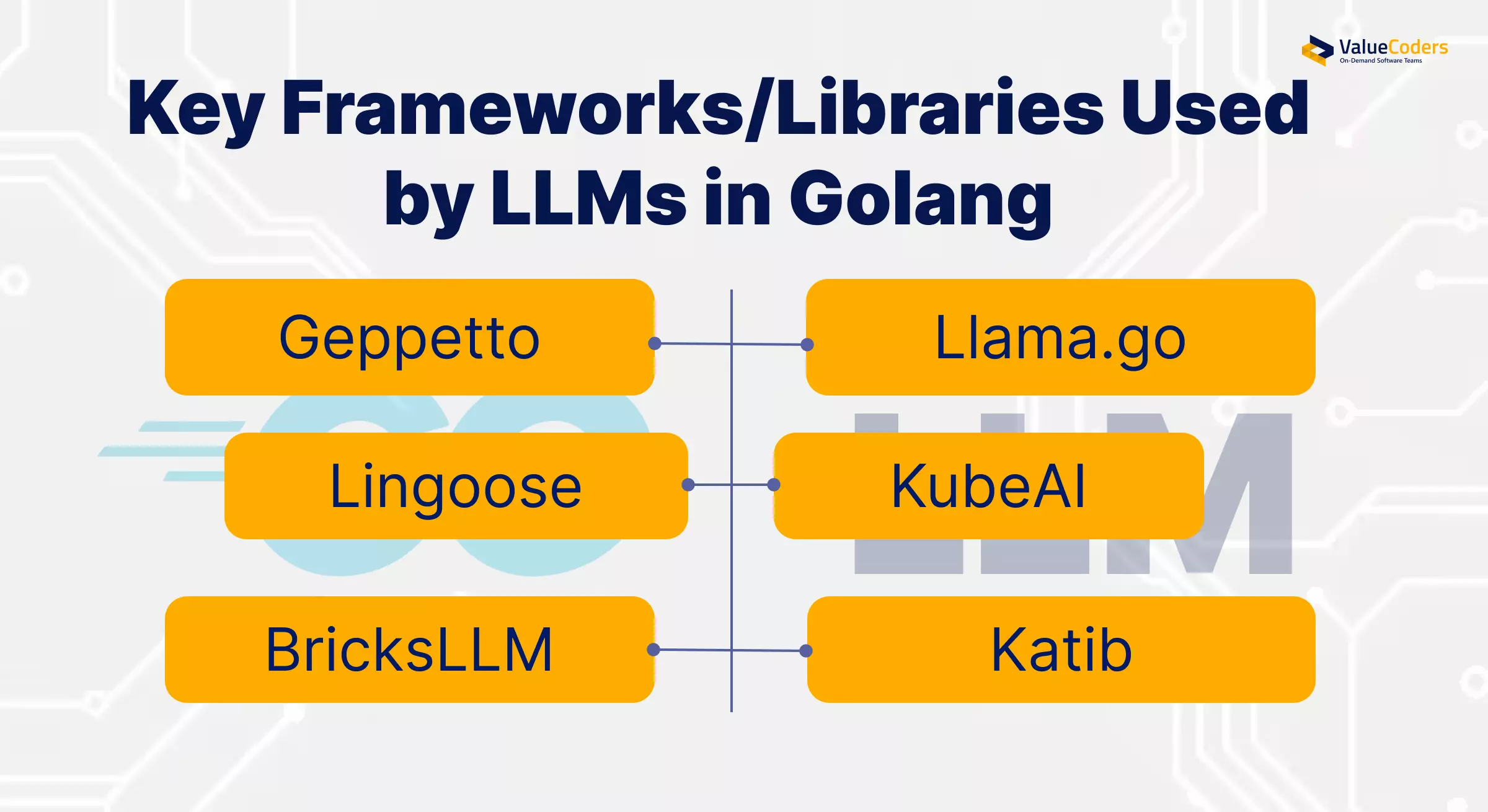

Top Libraries and Frameworks for Go-LLM Integration

Several powerful libraries and Golang AI frameworks help integrate Large Language Models (LLMs) with Golang for AI development. These enable text processing, automation, and AI-driven applications.

- Geppetto: Helps build applications with declarative chains for complex language tasks.

- Llama.go: A Go implementation for running LLMs locally, suitable for experimentation and production.

- BricksLLM: Provides monitoring tools, access control, and support for open-source and commercial LLMs in AI applications.

- Lingoose: Provides a flexible framework for developing LLM-powered applications with minimal effort.

- KubeAI: Optimized for Kubernetes environments, streamlining LLM deployment in containerized setups.

- Katib: Focuses on automating machine learning workflows, including hyperparameter tuning for LLMs.

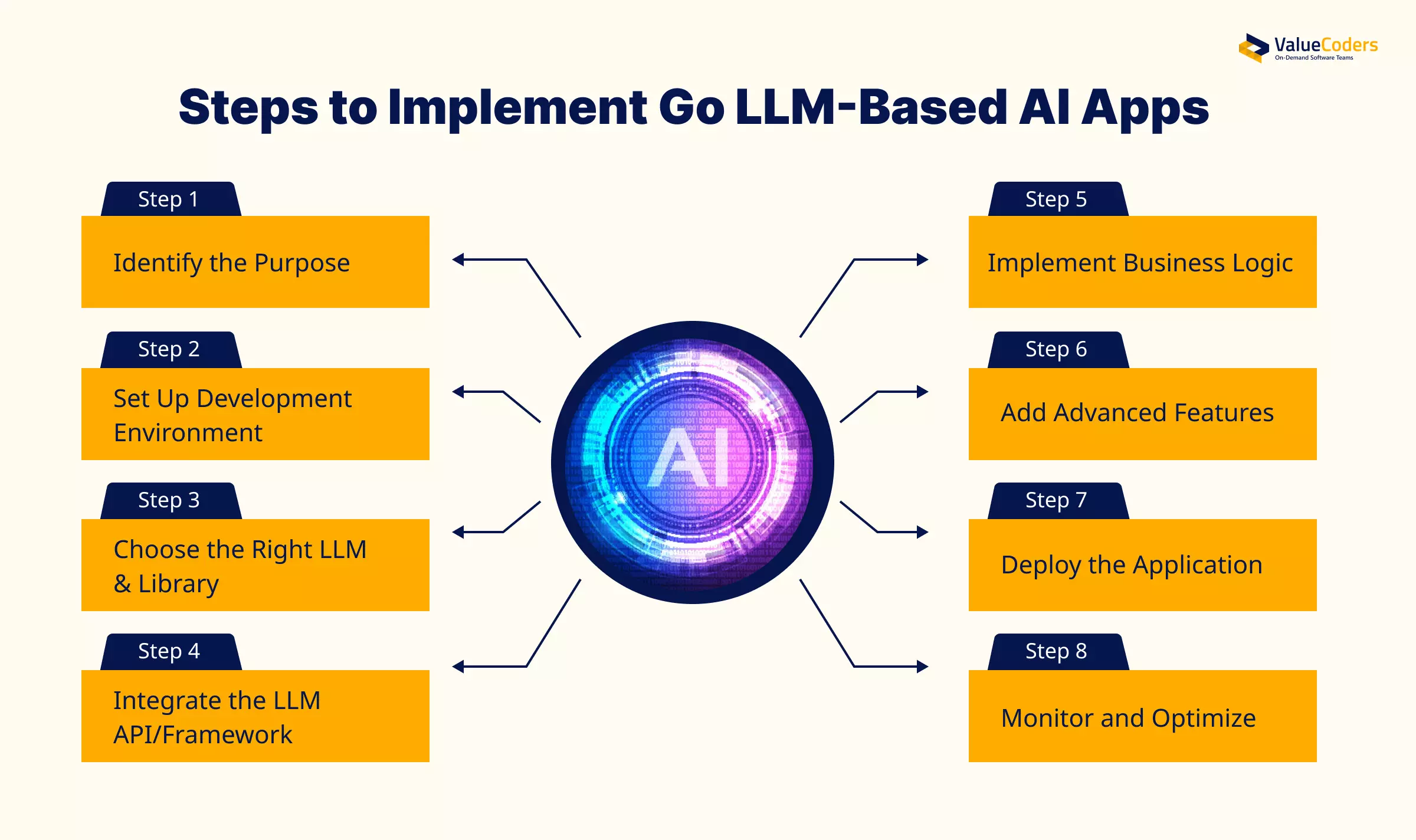

8 Steps to Build a Golang LLM-Based AI Application

An AI services company develops AI applications powered by LLMs using the Go programming language. Developers can use the following steps to simplify the integration process:

Step 1: Identify the Purpose

Defining your purpose before you start integrating Go with LLM-based applications is essential. To do this, clearly define the use cases and make sure you use the right LLMs and supporting tools.

You must determine the areas in which you need LLM capabilities, such as:

- Document summarization

- Customer service

- Sentiment analysis

- Text classification

- Content generation

Step 2: Set Up Development Environment

After identifying the purpose of the integration, install Go on your system, preferably the latest version. To set up the development environment, you can follow these steps:

- Download the latest version of Go from golang.org

- Configure environment variables such as GOROOT and GOPATH if needed (recent Go releases set sensible defaults)

- Use Go modules (go mod) to manage dependencies

Step 3: Choose the Right LLM & Library

The third step is to choose the appropriate large language model and libraries for your app. This involves:

- Libraries like Geppetto (for chaining prompts) and Llama.go (for running models locally)

- The OpenAI Go SDK for models like GPT-4

- Hugging Face models integrated through gRPC endpoints or Go-based APIs

Step 4: Integrate the LLM API/Framework

Start by setting up your Golang LLM app with its API keys (for example, an OpenAI key stored in a .env or configuration file) and adding the necessary Go packages.

For example:

```go
package main

import (
	"context"
	"fmt"
	"log"

	openai "github.com/sashabaranov/go-openai"
)

func main() {
	// Initialize the OpenAI client with your API key
	client := openai.NewClient("YOUR_API_KEY")

	// Create a chat completion request
	request := openai.ChatCompletionRequest{
		Model: openai.GPT4, // Specify the model you want to use
		Messages: []openai.ChatCompletionMessage{
			{Role: openai.ChatMessageRoleUser, Content: "Explain the benefits of using Golang for AI development."},
		},
	}

	// Send the request and capture the response
	response, err := client.CreateChatCompletion(context.Background(), request)
	if err != nil {
		log.Fatalf("Error creating chat completion: %v", err)
	}

	// Guard against an empty result before indexing into Choices
	if len(response.Choices) == 0 {
		log.Fatal("No choices returned by the model")
	}

	// Print the response message content
	fmt.Println("Response from LLM:")
	fmt.Println(response.Choices[0].Message.Content)
}
```

How to Run?

Follow these steps to run the program above. If this seems too technical to do on your own, consider hiring top LLM developers:

1. Replace "YOUR_API_KEY" with your actual OpenAI API key.

2. Install the go-openai package (run this inside a Go module; create one with go mod init if you have not already) using:

```
go get github.com/sashabaranov/go-openai
```

3. Run the program:

```
go run main.go
```

This code sends a prompt to the OpenAI GPT-4 model and prints the response. You can modify the message content or model as needed.
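Since Step 4 suggests keeping keys in a .env or configuration file rather than in source code, here is a minimal sketch that reads the key from an environment variable. The helper `apiKeyFromEnv` is hypothetical, and the variable name `OPENAI_API_KEY` is an assumed convention; use whatever name your deployment defines.

```go
package main

import (
	"errors"
	"fmt"
	"os"
)

// apiKeyFromEnv reads a secret from the named environment variable and
// returns an error when the variable is unset or empty.
func apiKeyFromEnv(name string) (string, error) {
	key := os.Getenv(name)
	if key == "" {
		return "", errors.New(name + " is not set")
	}
	return key, nil
}

func main() {
	// OPENAI_API_KEY is an assumed variable name for this sketch.
	key, err := apiKeyFromEnv("OPENAI_API_KEY")
	if err != nil {
		fmt.Println("configuration error:", err)
		return
	}
	// The key would be passed to openai.NewClient(key) instead of a literal.
	fmt.Println("API key loaded, length:", len(key))
}
```

Failing fast on a missing key gives a clearer message than an authentication error returned later by the API.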

Step 5: Implement Business Logic

Now it is time to build your app’s core functionality. You can do so by using AI consulting services and modular services like:

- Input pre-processing

- Prompt generation

- Output formatting

Moreover, using Go’s concurrency features will let you manage multiple user requests efficiently. You can also integrate APIs for tasks like data processing and retrieval.
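The modular stages above can be sketched as small functions composed into a pipeline, with one goroutine per request. All function names here are hypothetical, and `callModel` is a stand-in that only reports the prompt length instead of calling a real LLM.

```go
package main

import (
	"fmt"
	"strings"
	"sync"
)

// preprocess normalizes whitespace in raw user input.
func preprocess(input string) string {
	return strings.Join(strings.Fields(input), " ")
}

// buildPrompt wraps the cleaned input in a task instruction.
func buildPrompt(input string) string {
	return "Summarize in one sentence: " + input
}

// callModel is a stand-in for a real LLM call (assumption for this sketch).
func callModel(prompt string) string {
	return fmt.Sprintf("summary of %d characters", len(prompt))
}

// formatOutput capitalizes the first letter of the model reply.
func formatOutput(reply string) string {
	if reply == "" {
		return ""
	}
	return strings.ToUpper(reply[:1]) + reply[1:]
}

// handleAll runs the pipeline for every request in its own goroutine and
// returns the results in the same order as the inputs.
func handleAll(inputs []string) []string {
	results := make([]string, len(inputs))
	var wg sync.WaitGroup
	for i, in := range inputs {
		wg.Add(1)
		go func(i int, in string) {
			defer wg.Done()
			results[i] = formatOutput(callModel(buildPrompt(preprocess(in))))
		}(i, in)
	}
	wg.Wait()
	return results
}

func main() {
	for _, r := range handleAll([]string{"  first   request ", "second request"}) {
		fmt.Println(r)
	}
}
```

Each goroutine writes only to its own slice index, so no mutex is needed, and keeping the stages separate makes each one easy to test or replace.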

Step 6: Add Advanced Features

You can enhance your application with additional capabilities, such as:

- User interactions

- Analytics

- Custom models

- Model performance analysis

Step 7: Deploy the Application

It’s time to deploy your Go-based LLM app for market launch. You can do this by packaging it with Docker for consistent deployment and hosting it on Google Cloud, AWS, or Azure, with Kubernetes for scaling.

Additionally, you can opt for Google Cloud Functions for cost efficiency.

Step 8: Monitor and Optimize

You must continuously monitor and improve your application. Use tools like Grafana and Prometheus to track behavior, optimize prompts and API calls to decrease latency, and regularly update models and libraries to benefit from the latest advancements.
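To make the latency monitoring concrete, here is a standard-library-only sketch that times calls and reports an average. In practice these measurements would usually be exported to a metrics system such as Prometheus; the `latencyStats` type and `timed` helper are hypothetical, and the sleep stands in for an LLM API call.

```go
package main

import (
	"fmt"
	"sync"
	"time"
)

// latencyStats accumulates call counts and total duration.
// It is safe for concurrent use.
type latencyStats struct {
	mu    sync.Mutex
	count int
	total time.Duration
}

// observe records the duration of one call.
func (s *latencyStats) observe(d time.Duration) {
	s.mu.Lock()
	defer s.mu.Unlock()
	s.count++
	s.total += d
}

// average returns the mean duration, or zero when nothing was recorded.
func (s *latencyStats) average() time.Duration {
	s.mu.Lock()
	defer s.mu.Unlock()
	if s.count == 0 {
		return 0
	}
	return s.total / time.Duration(s.count)
}

// timed runs fn and records how long it took.
func timed(s *latencyStats, fn func()) {
	start := time.Now()
	fn()
	s.observe(time.Since(start))
}

func main() {
	stats := &latencyStats{}
	for i := 0; i < 3; i++ {
		timed(stats, func() {
			time.Sleep(10 * time.Millisecond) // stand-in for an LLM API call
		})
	}
	fmt.Println("calls:", stats.count, "average latency:", stats.average())
}
```

Wrapping the call rather than editing it keeps the measurement separate from the business logic, so the same helper can time any stage.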

Actual-World Purposes of Golang-LLM Integration

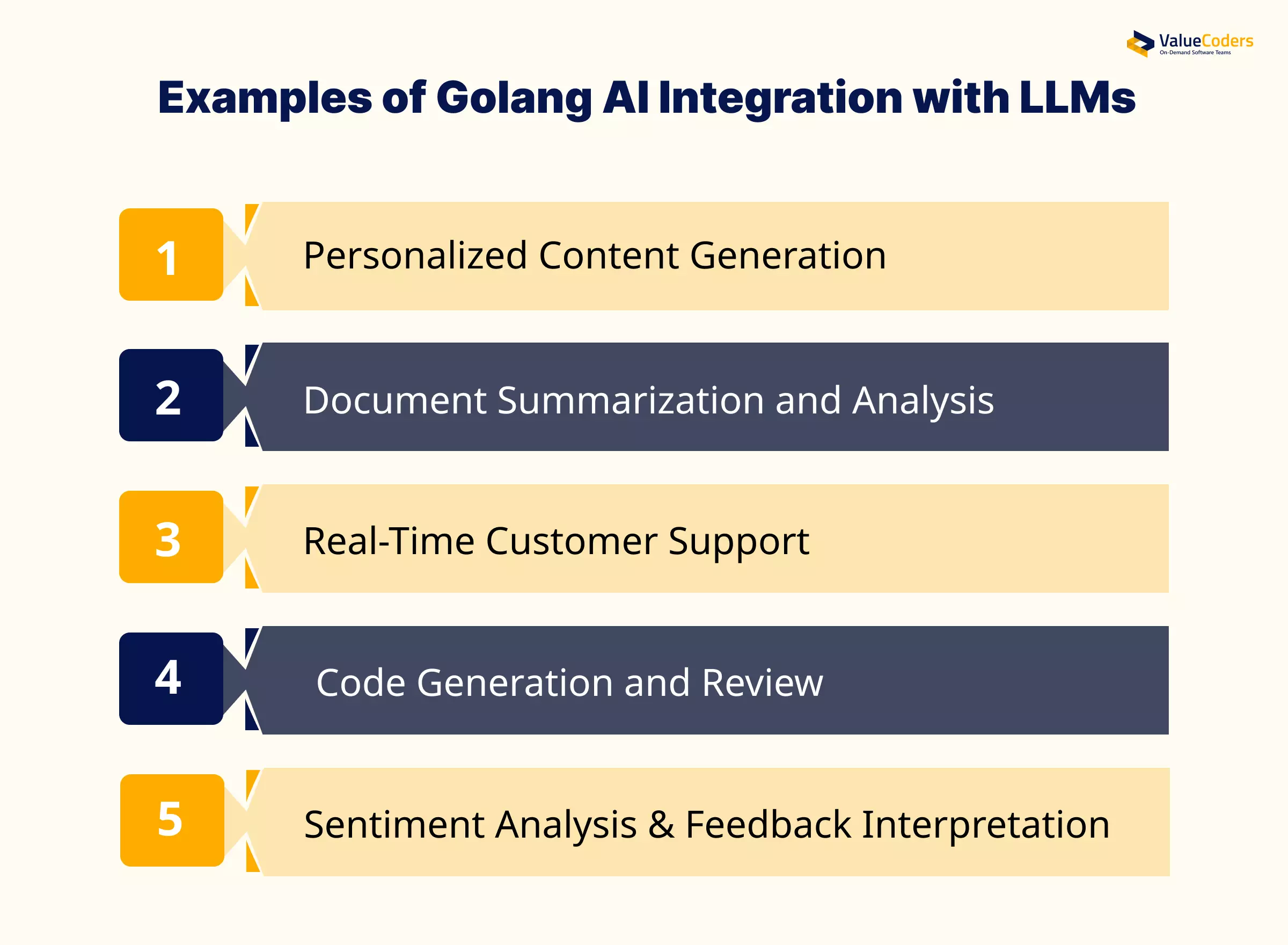

A number of enterprises use Golang and LLMs to construct superior AI purposes, from chatbots to automated content material evaluation. Let’s discover among the well-known examples of Golang AI integration utilizing LLMs:

Personalised Content material Era

Go-based purposes powered by LLMs can generate emails, weblog posts, and product descriptions primarily based on consumer preferences. It’s key options embrace:

- Integration with different LLM APIs by way of Go’s HTTP shoppers and JSON dealing with

- Context-aware content material creation utilizing consumer preferences and historic knowledge saved in Go constructions

- Price limiting and request optimization utilizing Go’s concurrency options like goroutines and channels

- Template-based era with Go’s textual content/template package deal mixed with LLM outputs

- Error dealing with and fallback mechanisms for dependable content material supply
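Several of these features can be combined in one small sketch: a `text/template` prompt, a buffered channel that bounds the number of in-flight calls (a concurrency limit, which is a simpler relative of true rate limiting), and a fallback message when generation fails. The `userPrefs` type and the `generate` stand-in are assumptions for illustration.

```go
package main

import (
	"errors"
	"fmt"
	"strings"
	"sync"
	"text/template"
)

// userPrefs is a hypothetical structure holding stored preferences.
type userPrefs struct {
	Name, Tone, Topic string
}

var promptTmpl = template.Must(template.New("prompt").Parse(
	"Write a {{.Tone}} email to {{.Name}} about {{.Topic}}."))

// renderPrompt fills the template with one user's preferences.
func renderPrompt(p userPrefs) (string, error) {
	var b strings.Builder
	if err := promptTmpl.Execute(&b, p); err != nil {
		return "", err
	}
	return b.String(), nil
}

// generate is a stand-in for an LLM call; it fails for an empty topic
// so that the fallback path can be demonstrated.
func generate(prompt string, p userPrefs) (string, error) {
	if p.Topic == "" {
		return "", errors.New("model returned no content")
	}
	return "[draft] " + prompt, nil
}

// generateAll limits concurrent generate calls to maxInFlight.
func generateAll(users []userPrefs, maxInFlight int) []string {
	if maxInFlight < 1 {
		maxInFlight = 1
	}
	out := make([]string, len(users))
	sem := make(chan struct{}, maxInFlight)
	var wg sync.WaitGroup
	for i, u := range users {
		wg.Add(1)
		go func(i int, u userPrefs) {
			defer wg.Done()
			sem <- struct{}{}        // acquire a slot
			defer func() { <-sem }() // release the slot
			prompt, err := renderPrompt(u)
			if err == nil {
				out[i], err = generate(prompt, u)
			}
			if err != nil {
				out[i] = "Hello " + u.Name + ", we will be in touch soon." // fallback
			}
		}(i, u)
	}
	wg.Wait()
	return out
}

func main() {
	users := []userPrefs{
		{Name: "Ana", Tone: "friendly", Topic: "renewal"},
		{Name: "Bo", Tone: "formal"},
	}
	for _, msg := range generateAll(users, 2) {
		fmt.Println(msg)
	}
}
```

For limits expressed per unit of time rather than per in-flight request, a ticker or a dedicated rate limiter would be the more fitting tool.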

Document Summarization and Analysis

Enterprises use these tools to condense lengthy documents, making it easier to process information quickly. Key features include:

- PDF and document parsing using Go libraries like pdfcpu or UniDoc

- Chunking large documents into processable segments using Go’s string manipulation

- Parallel processing of document sections using Go’s concurrency features

- Metadata extraction and organization using Go structs

- Integration with vector databases like Milvus or Weaviate for semantic search capabilities
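The chunking and parallel-processing items can be sketched as follows. Splitting by word count is an assumption made for simplicity (real systems often split by tokens or sections), and `summarizeChunk` is a placeholder for an LLM call.

```go
package main

import (
	"fmt"
	"strings"
	"sync"
)

// chunkWords splits text into chunks of at most maxWords words each.
func chunkWords(text string, maxWords int) []string {
	if maxWords < 1 {
		maxWords = 1
	}
	words := strings.Fields(text)
	var chunks []string
	for start := 0; start < len(words); start += maxWords {
		end := start + maxWords
		if end > len(words) {
			end = len(words)
		}
		chunks = append(chunks, strings.Join(words[start:end], " "))
	}
	return chunks
}

// summarizeChunk is a placeholder for an LLM call (assumption): it keeps
// only the first word of the chunk.
func summarizeChunk(chunk string) string {
	fields := strings.Fields(chunk)
	if len(fields) == 0 {
		return ""
	}
	return fields[0] + "..."
}

// summarizeAll processes chunks in parallel and preserves their order.
func summarizeAll(chunks []string) []string {
	out := make([]string, len(chunks))
	var wg sync.WaitGroup
	for i, c := range chunks {
		wg.Add(1)
		go func(i int, c string) {
			defer wg.Done()
			out[i] = summarizeChunk(c)
		}(i, c)
	}
	wg.Wait()
	return out
}

func main() {
	chunks := chunkWords("one two three four five", 2)
	fmt.Println(len(chunks), "chunks")
	for _, s := range summarizeAll(chunks) {
		fmt.Println(s)
	}
}
```

Writing each result to its own index keeps the summaries in document order even though the goroutines may finish in any order.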

Real-Time Customer Support

Golang and LLM-based chatbots handle thousands of customer queries simultaneously, improving response times. Key features include:

- WebSocket implementations for live chat functionality using gorilla/websocket

- Queue management for incoming requests using Go channels

- Conversation state preserved per session, with Go’s context package carrying request-scoped values, deadlines, and cancellation

- Integration with existing customer databases and CRM systems

- Automated response generation with failover to human support

- Response caching and optimization using Go’s sync package
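Response caching with the sync package might look like the sketch below, which stores replies under a normalized question key guarded by a `sync.RWMutex`. The `responseCache` type is hypothetical, and the `answer` function stands in for an LLM call.

```go
package main

import (
	"fmt"
	"strings"
	"sync"
)

// responseCache memoizes replies keyed by a normalized question.
type responseCache struct {
	mu      sync.RWMutex
	entries map[string]string
}

func newResponseCache() *responseCache {
	return &responseCache{entries: make(map[string]string)}
}

// normalize lowercases the question and collapses whitespace so that
// trivially different spellings share one cache entry.
func normalize(q string) string {
	return strings.ToLower(strings.Join(strings.Fields(q), " "))
}

// getOrCompute returns a cached reply when present; otherwise it calls
// compute and stores the result. Concurrent misses for the same key may
// each call compute; the last write wins.
func (c *responseCache) getOrCompute(question string, compute func(string) string) (string, bool) {
	key := normalize(question)
	c.mu.RLock()
	v, ok := c.entries[key]
	c.mu.RUnlock()
	if ok {
		return v, true
	}
	v = compute(question)
	c.mu.Lock()
	c.entries[key] = v
	c.mu.Unlock()
	return v, false
}

func main() {
	cache := newResponseCache()
	answer := func(q string) string { return "Answer to: " + q } // stand-in for an LLM call
	for _, q := range []string{"Where is my order?", "where is  my ORDER?"} {
		reply, cached := cache.getOrCompute(q, answer)
		fmt.Printf("cached=%v reply=%q\n", cached, reply)
	}
}
```

A production cache would normally also need expiry and a size bound, which this sketch leaves out.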

Code Generation and Review

Developers rely on LLMs to automate repetitive coding tasks and review code for potential improvements. Key features include:

- Abstract Syntax Tree (AST) parsing using Go’s built-in go/parser and go/ast packages

- Integration with version control systems using go-git

- Pattern matching and code analysis using regular expressions

- Concurrent processing of multiple code files

- Static code analysis integration with LLM suggestions

- Custom rule enforcement and style checking
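As a small illustration of AST parsing with the standard library, the sketch below lists the top-level functions in a piece of Go source. A review tool could send each function to an LLM separately; the helper name `funcNames` is an assumption for this example.

```go
package main

import (
	"fmt"
	"go/ast"
	"go/parser"
	"go/token"
)

// funcNames parses Go source and returns the names of its top-level
// functions and methods in declaration order.
func funcNames(src string) ([]string, error) {
	fset := token.NewFileSet()
	file, err := parser.ParseFile(fset, "input.go", src, 0)
	if err != nil {
		return nil, err
	}
	var names []string
	for _, decl := range file.Decls {
		if fn, ok := decl.(*ast.FuncDecl); ok {
			names = append(names, fn.Name.Name)
		}
	}
	return names, nil
}

func main() {
	src := "package demo\n\nfunc Add(a, b int) int { return a + b }\n\nfunc helper() {}\n"
	names, err := funcNames(src)
	if err != nil {
		fmt.Println("parse error:", err)
		return
	}
	fmt.Println(names) // prints [Add helper]
}
```

Working from the AST rather than raw text avoids the false matches that regular expressions can produce inside comments and string literals.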

Sentiment Analysis & Feedback Interpretation

Go applications analyze customer reviews and social media comments to gauge public sentiment and identify areas for improvement. Key features include:

- Batch processing of feedback data using Go’s concurrency features

- Integration with SQL databases for storing and analyzing feedback trends

- Real-time sentiment scoring using Go’s efficient processing

- Statistical analysis using Go’s math packages

- Custom categorization and tagging systems

- Aggregation of sentiment data across multiple sources
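The aggregation and statistical analysis items can be sketched with the standard math package. The `feedback` record and its score range of -1 to 1 are assumptions for illustration; the scores themselves would come from a model.

```go
package main

import (
	"fmt"
	"math"
)

// feedback is a hypothetical record: a source label and a sentiment
// score assumed to lie between -1 and 1.
type feedback struct {
	Source string
	Score  float64
}

// stats holds the count, mean, and population standard deviation of the
// scores for one source.
type stats struct {
	Count  int
	Mean   float64
	StdDev float64
}

// aggregate groups scores by source and computes summary statistics.
func aggregate(items []feedback) map[string]stats {
	sums := map[string]float64{}
	counts := map[string]int{}
	for _, f := range items {
		sums[f.Source] += f.Score
		counts[f.Source]++
	}
	sqDiffs := map[string]float64{}
	for _, f := range items {
		mean := sums[f.Source] / float64(counts[f.Source])
		sqDiffs[f.Source] += (f.Score - mean) * (f.Score - mean)
	}
	out := make(map[string]stats, len(counts))
	for src, n := range counts {
		out[src] = stats{
			Count:  n,
			Mean:   sums[src] / float64(n),
			StdDev: math.Sqrt(sqDiffs[src] / float64(n)),
		}
	}
	return out
}

func main() {
	items := []feedback{
		{Source: "reviews", Score: 0.5},
		{Source: "reviews", Score: 1.0},
		{Source: "social", Score: -0.5},
	}
	result := aggregate(items)
	for _, src := range []string{"reviews", "social"} {
		s := result[src]
		fmt.Printf("%s: n=%d mean=%.2f stddev=%.2f\n", src, s.Count, s.Mean, s.StdDev)
	}
}
```

Reporting the spread alongside the mean helps distinguish a source with uniformly lukewarm feedback from one with sharply divided opinions.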

Future of Golang and LLM in AI Applications

The combination of Go and LLMs will play a crucial role in shaping the future of AI. Go’s efficiency and LLMs’ capabilities will support advancements in real-time applications, IoT integration, and on-device AI.

With growing concerns about data security, Go’s built-in safety features make it an ideal choice for AI projects that handle sensitive information. Additionally, as industries demand smarter applications, this partnership will continue driving innovation in AI.

Conclusion

Golang and LLMs together are redefining modern AI development by providing a unique combination of scalability, speed, and efficiency. By using Golang’s concurrency model and LLMs’ advanced language capabilities, businesses can develop intelligent apps.

Whether for content creation, customer support, or analytics, this duo is set to shape the future of AI-powered solutions.

Bring your AI vision to life with ValueCoders. Our software teams ensure a seamless transition from concept to deployment.

We specialize in integrating Golang and LLMs to build high-performance AI apps. Our approach includes custom AI development, continuous optimization, scalable architecture, and seamless integration.